Configuring and Upgrading VMFS and NFS

This post covers VCP6-DCV Objective 3.4 – Perform Advanced VMFS and NFS Configurations and Upgrades. It is an important storage chapter where you’ll learn the ins and outs of VMFS, datastore management, and how to enable or disable the vStorage API for Array Integration.

For whole exam coverage I created a dedicated VCP6-DCV page which follows the exam’s blueprint. If you just want to look at some how-tos, news, or videos about vSphere 6, check out my vSphere 6 page. If you find that I missed something in this post, don’t hesitate to comment.

VMware vSphere Knowledge

- Identify VMFS and NFS Datastore properties

- Identify VMFS5 capabilities

- Create/Rename/Delete/Unmount a VMFS Datastore

- Mount/Unmount an NFS Datastore

- Extend/Expand VMFS Datastores

- Place a VMFS Datastore in Maintenance Mode

- Identify available Raw Device Mapping (RDM) solutions

- Select the Preferred Path for a VMFS Datastore

- Enable/Disable vStorage API for Array Integration (VAAI)

- Disable a path to a VMFS Datastore

- Determine use case for multiple VMFS/NFS Datastores

Identify VMFS and NFS Datastore properties

What is a datastore? It is a logical container which stores the VMDKs of your VMs. VMFS is a clustered file system which allows multiple hosts to access files on a shared datastore.

VMFS uses a locking mechanism (ATS or ATS + SCSI) which prevents multiple hosts from concurrently writing to the metadata and ensures that there is no data corruption. Check page 149 of the vSphere Storage guide for more on the ATS and ATS+SCSI locking mechanisms.

NFS – Network File System, which can be mounted by an ESXi host (the host uses its built-in NFS client). NFS datastores support vMotion and SvMotion, HA, DRS, FT, and host profiles (note that NFS 4.1 does not support FT). NFS v3 and NFS v4.1 are supported with vSphere 6.0.

VMDKs are provisioned as “Thin” by default on an NFS datastore.

Identify VMFS5 capabilities

- Larger than 2TB storage devices for each VMFS5 extent.

- Support of virtual machines with large capacity virtual disks, or disks greater than 2TB.

- Increased resource limits such as file descriptors.

- Standard 1MB file system block size with support of 2TB virtual disks.

- Greater than 2TB disk size for RDMs

- Support of small files of 1KB.

- Ability to open any file located on a VMFS5 datastore in a shared mode by a maximum of 64 hosts.

- Can reclaim physical storage space on thin provisioned storage devices.

Upgrades from previous versions of VMFS:

- VMFS datastores can be upgraded without disrupting hosts or virtual machines.

- When creating a new VMFS datastore, there is a choice between the VMFS 3 and VMFS 5 versions of the datastore.

- New VMFS datastores are created with the GPT format.

- A VMFS datastore which has been upgraded continues to use the MBR format until it is expanded beyond 2TB. At that point the MBR format is converted to GPT.

- Maximum VMFS datastores per host – 256 VMFS datastores

- The host needs to run ESXi 5.0 or higher.

- No way back: the upgrade is one-way, and a VMFS 5 datastore cannot be downgraded to VMFS 3.
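The partition format rule in the list above can be written down as a small decision function. This is only a sketch of the rule as stated in this post: the function name and arguments are made up for illustration, and treating "2TB" as 2 × 1024⁴ bytes is an assumption.

```python
# Hypothetical helper: encodes the GPT/MBR rule described in the list above.
TWO_TB_BYTES = 2 * 1024**4  # assumption: "2TB" interpreted as 2 TiB


def partition_format(created_as_vmfs5: bool, size_bytes: int) -> str:
    """Return the partition format a VMFS5 datastore is expected to use."""
    if created_as_vmfs5:
        # Newly created VMFS5 datastores always use GPT.
        return "GPT"
    # Upgraded datastores keep MBR until they are expanded beyond 2TB.
    return "GPT" if size_bytes > TWO_TB_BYTES else "MBR"
```

For example, `partition_format(False, 1024**4)` describes an upgraded 1TB datastore, which stays on MBR.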

Create/Rename/Delete/Unmount a VMFS Datastore

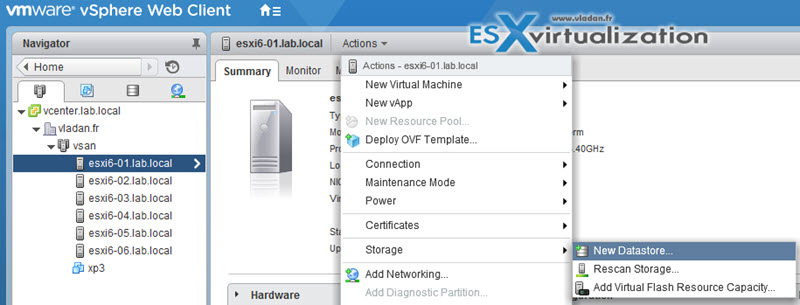

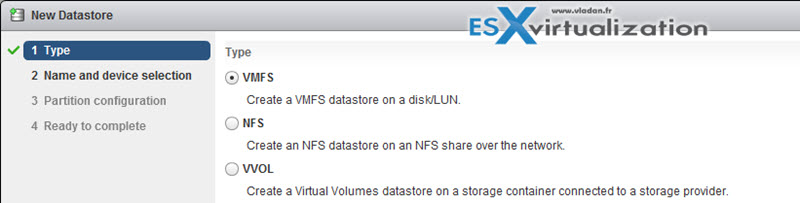

Create Datastore – vSphere Web Client > Hosts and Clusters > Select Host > Actions > Storage > New Datastore

A wizard then guides you through the remaining steps.

The datastore can also be created via the vSphere C# client.

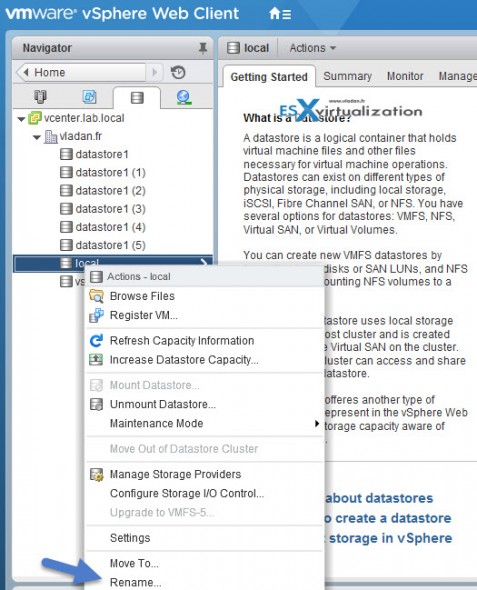

To rename a datastore: Home > Storage > Right click the datastore > Rename

As you can see, you can also unmount or delete the datastore via the same right-click menu.

Before you unmount a datastore, make sure that:

- There are NO VMs on the datastore you want to unmount.

- If HA is configured, the datastore is not used for HA heartbeats.

- The datastore is not managed by Storage DRS.

- Storage I/O Control (SIOC) is disabled on the datastore.
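The checklist above can be modelled as a simple pre-flight check. The sketch below does not talk to vCenter; the `DatastoreState` fields are hypothetical and you would have to fill them in from your own inventory data.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DatastoreState:
    """Hypothetical snapshot of the facts the checklist above cares about."""
    vm_count: int
    used_for_ha_heartbeat: bool
    managed_by_sdrs: bool
    sioc_enabled: bool


def unmount_blockers(state: DatastoreState) -> List[str]:
    """Return the reasons why the datastore should not be unmounted yet."""
    blockers = []
    if state.vm_count > 0:
        blockers.append("virtual machines still reside on the datastore")
    if state.used_for_ha_heartbeat:
        blockers.append("datastore is used for HA heartbeats")
    if state.managed_by_sdrs:
        blockers.append("datastore is managed by Storage DRS")
    if state.sioc_enabled:
        blockers.append("Storage I/O Control is enabled")
    return blockers
```

An empty list means all four prerequisites from the checklist are met.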

Mount/Unmount an NFS Datastore

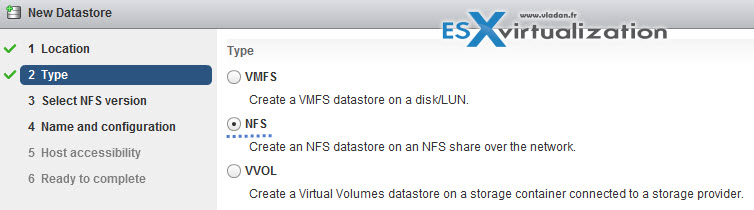

Create an NFS mount in a similar way as above: Right click the datacenter > Storage > Add Storage.

You can use NFS 3 or NFS 4.1 (note the limitations of NFS 4.1 for FT or SIOC). Enter the Name, Folder, and Server (IP or FQDN).

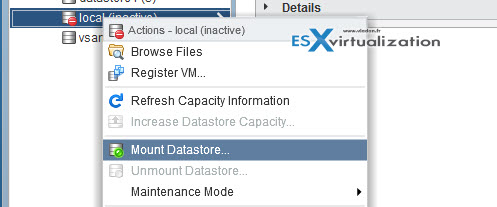

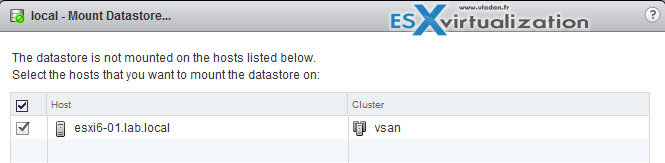

To mount or unmount an NFS datastore, right click the datastore and pick the corresponding action.

Then choose the host(s) on which you want the datastore mounted.

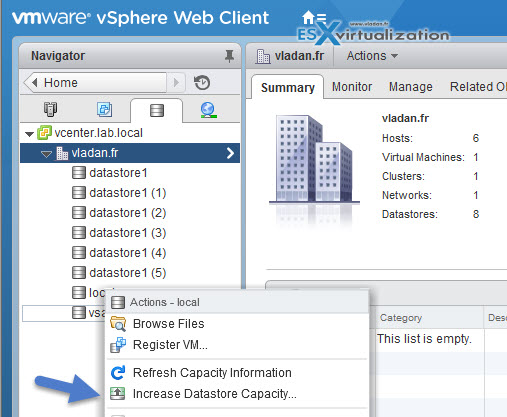

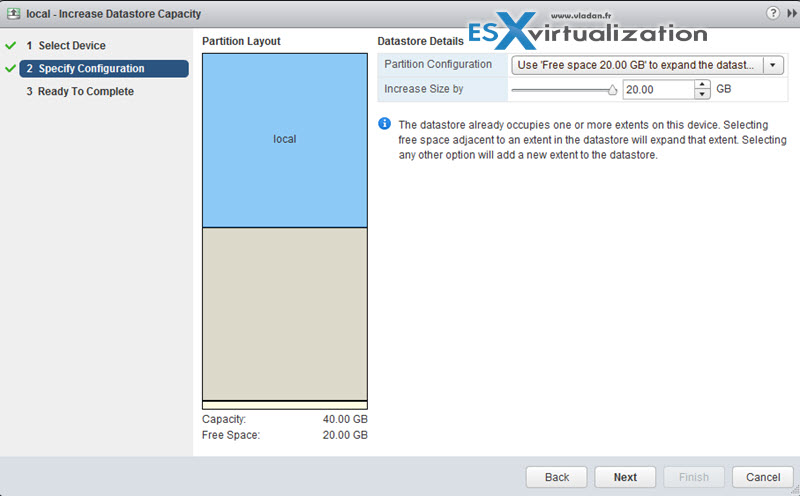
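The same mount and unmount can be done from the ESXi shell with `esxcli storage nfs` (NFS 3) or `esxcli storage nfs41` (NFS 4.1). The sketch below only builds the command lines so you can review them before running them on a host; the helper names and the example server, share, and datastore name are made up.

```python
from typing import List


def nfs_mount_command(server: str, share: str, name: str, version: str = "3") -> List[str]:
    """Build the esxcli command that mounts an NFS export as a datastore."""
    if version == "3":
        return ["esxcli", "storage", "nfs", "add",
                "--host", server, "--share", share, "--volume-name", name]
    if version == "4.1":
        # The nfs41 namespace takes --hosts (one or more comma-separated servers).
        return ["esxcli", "storage", "nfs41", "add",
                "--hosts", server, "--share", share, "--volume-name", name]
    raise ValueError("version must be '3' or '4.1'")


def nfs_unmount_command(name: str, version: str = "3") -> List[str]:
    """Build the esxcli command that unmounts an NFS datastore by name."""
    namespace = {"3": "nfs", "4.1": "nfs41"}[version]
    return ["esxcli", "storage", namespace, "remove", "--volume-name", name]


if __name__ == "__main__":
    # Example values are placeholders, not taken from the screenshots above.
    print(" ".join(nfs_mount_command("192.0.2.10", "/export/vmstore", "nfs-ds01")))
```

Unlike the Web Client wizard, these commands act on one host at a time, so they have to be repeated on every host that should see the datastore.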

Extend/Expand VMFS Datastores

It’s possible to expand an existing datastore either by adding an extent OR by growing an expandable datastore to fill the available capacity.

To grow the datastore, you just select the device.

You can also add a new extent, which means the datastore can span up to 32 extents and still appear as a single volume. In reality, though, not many VMware admins like to use extents.

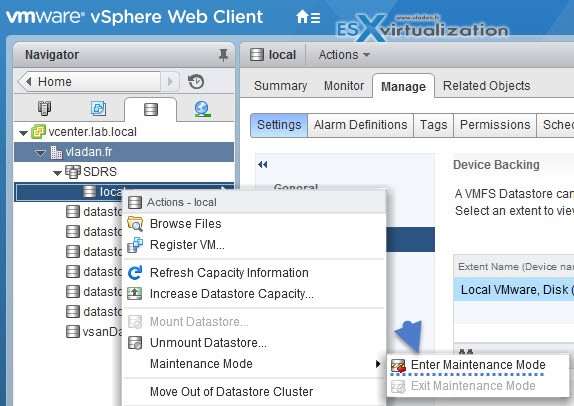

Place a VMFS Datastore in Maintenance Mode

Maintenance mode for a datastore is available only if the datastore is part of a Storage DRS (SDRS) cluster. A regular datastore cannot be placed in maintenance mode. So if you want to use SDRS you must first create an SDRS cluster: Right click the datacenter > Storage > New Datastore Cluster.

Only then can you put the datastore in maintenance mode.

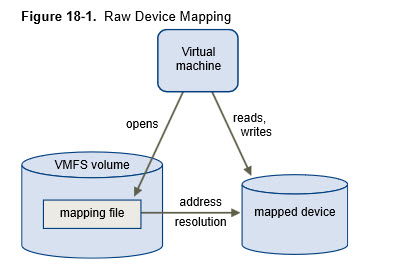

Identify available Raw Device Mapping (RDM) solutions

See the vSphere Storage guide, p. 203. An RDM allows a VM to access a LUN directly. Think of an RDM as a symbolic link from a VMFS volume to a raw LUN.

An RDM is a mapping file in a separate VMFS volume that acts as a proxy for a raw physical storage device. The RDM allows a virtual machine to directly access and use the storage device. The RDM contains metadata for managing and redirecting disk access to the physical device.

When to use RDM?

- When SAN snapshot or other layered applications run in the virtual machine. The RDM better enables scalable backup offloading systems by using features inherent to the SAN.

- In any MSCS clustering scenario that spans physical hosts — virtual-to-virtual clusters as well as physical-to-virtual clusters. In this case, cluster data and quorum disks should be configured as RDMs rather than as virtual disks on a shared VMFS.

If an RDM is used in physical compatibility mode, the VM cannot be snapshotted. Virtual machine snapshots are available for RDMs in virtual compatibility mode.

Physical Compatibility Mode – The VMkernel passes all SCSI commands to the device, with one exception: the REPORT LUNs command is virtualized so that the VMkernel can isolate the LUN to the owning virtual machine. Otherwise, all physical characteristics of the underlying hardware are exposed, which allows the guest operating system to access the hardware directly. A VM with a physical compatibility RDM has limits: you cannot clone such a VM or turn it into a template. Migrations that involve copying the disk (Storage vMotion or cold migration) are also not possible.

Virtual Compatibility Mode – The VMkernel sends only READ and WRITE to the mapped device. The mapped device appears to the guest operating system exactly the same as a virtual disk file in a VMFS volume. The real hardware characteristics are hidden. If you are using a raw disk in virtual mode, you can realize the benefits of VMFS such as advanced file locking for data protection and snapshots for streamlining development processes. Virtual mode is also more portable across storage hardware than physical mode, presenting the same behavior as a virtual disk file (VMDK). You can use snapshots, clones, and templates. When an RDM disk in virtual compatibility mode is cloned or a template is created out of it, the contents of the LUN are copied into a .vmdk virtual disk file.

Other limitations:

- You cannot map to a disk partition. RDMs require the mapped device to be a whole LUN.

- vFRC (Flash Read Cache) does not support RDMs in physical compatibility mode (RDMs in virtual compatibility mode are supported).

- If you use vMotion to migrate virtual machines with RDMs, make sure to maintain consistent LUN IDs for RDMs across all participating ESXi hosts

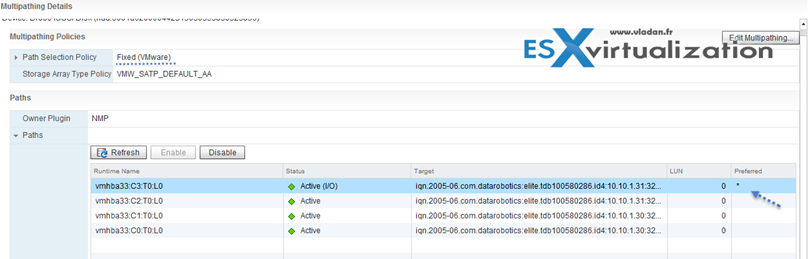
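To keep the two compatibility modes apart, it can help to put the differences described above into a small lookup table. The sketch below only restates what this section says; the feature keys and the helper name are made up for illustration.

```python
from typing import Iterable, List

# Feature support per RDM compatibility mode, as described in this section.
RDM_CAPABILITIES = {
    "physical": {"vm_snapshots": False, "clone_or_template": False, "flash_read_cache": False},
    "virtual": {"vm_snapshots": True, "clone_or_template": True, "flash_read_cache": True},
}


def modes_supporting(required: Iterable[str]) -> List[str]:
    """Return the RDM modes that support every feature in `required`."""
    required = list(required)
    return [mode for mode, caps in RDM_CAPABILITIES.items()
            if all(caps[feature] for feature in required)]
```

Calling `modes_supporting(["vm_snapshots"])` leaves only virtual compatibility mode, while an empty requirement list returns both modes.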

Select the Preferred Path for a VMFS Datastore

For each storage device, the ESXi host sets the path selection policy based on the claim rules. The different path policies were covered in an earlier chapter, Configure vSphere Storage Multi-pathing and Failover.

If you want the host to use a particular preferred path, specify it manually.

Fixed – (VMW_PSP_FIXED) The host uses the designated preferred path if one is configured. If not, it uses the first working path discovered. The preferred path needs to be configured manually.
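The preferred path can also be set from the command line with `esxcli storage nmp`. The sketch below builds the two commands involved (switch the device to the Fixed policy, then set the preferred path); the helper name is made up, and the device ID and path you pass in are placeholders for your own values.

```python
from typing import List


def fixed_preferred_path_commands(device: str, path: str) -> List[List[str]]:
    """Build the esxcli commands that set Fixed PSP and a preferred path."""
    return [
        # 1) Make sure the device uses the Fixed path selection policy.
        ["esxcli", "storage", "nmp", "device", "set",
         "--device", device, "--psp", "VMW_PSP_FIXED"],
        # 2) Designate the preferred path for that device.
        ["esxcli", "storage", "nmp", "psp", "fixed", "deviceconfig", "set",
         "--device", device, "--path", path],
    ]


if __name__ == "__main__":
    # Placeholder identifiers; list real ones with `esxcli storage nmp device list`.
    for cmd in fixed_preferred_path_commands("naa.example", "vmhba2:C0:T0:L1"):
        print(" ".join(cmd))
```

Changing the policy applies per device and per host, so review the printed commands before running them.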

Enable/Disable vStorage API for Array Integration (VAAI)

You need storage hardware that supports offloading storage operations such as:

- Cloning VMs

- Storage vMotion migrations

- Deploying VMs from templates

- VMFS locking and metadata operations

- Provisioning thick disks

- Enabling FT protected VMs

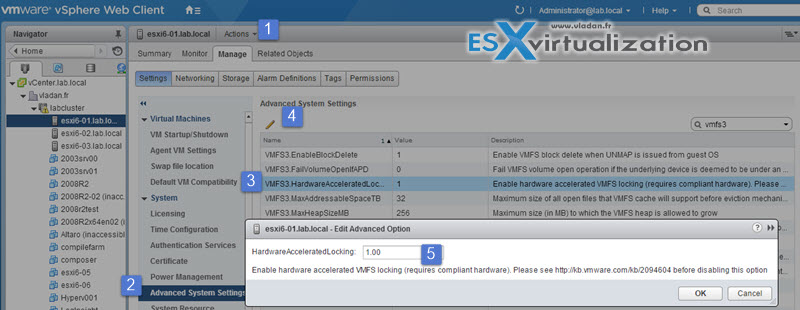

How to enable or disable VAAI?

Enable = 1

Disable = 0

vSphere Web Client > select the host > Manage tab > Settings > System > Advanced System Settings, then change the value of any of the following options to 0 (disabled) or 1 (enabled):

- VMFS3.HardwareAcceleratedLocking

- DataMover.HardwareAcceleratedMove

- DataMover.HardwareAcceleratedInit

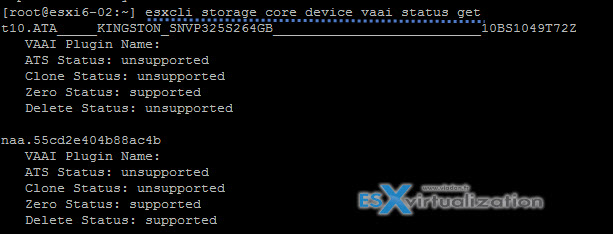
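The same three advanced settings can be changed from the ESXi shell with `esxcli system settings advanced set`. The sketch below just generates the commands for all three options at once; the function name is made up, and the option paths are the settings listed above written in esxcli's slash notation.

```python
from typing import List

# The three VAAI block primitives listed above, in esxcli option notation.
VAAI_OPTIONS = (
    "/VMFS3/HardwareAcceleratedLocking",
    "/DataMover/HardwareAcceleratedMove",
    "/DataMover/HardwareAcceleratedInit",
)


def vaai_commands(enable: bool) -> List[List[str]]:
    """Build esxcli commands that enable (1) or disable (0) the VAAI options."""
    value = "1" if enable else "0"
    return [
        ["esxcli", "system", "settings", "advanced", "set",
         "--option", option, "--int-value", value]
        for option in VAAI_OPTIONS
    ]


if __name__ == "__main__":
    for cmd in vaai_commands(enable=False):
        print(" ".join(cmd))
```

Each setting is per host, so the commands need to be applied on every host where the behaviour should change.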

You can check the hardware acceleration status of block devices via the CLI (esxcli storage core device vaai status get)

or, for NAS devices, with (esxcli storage nfs list).

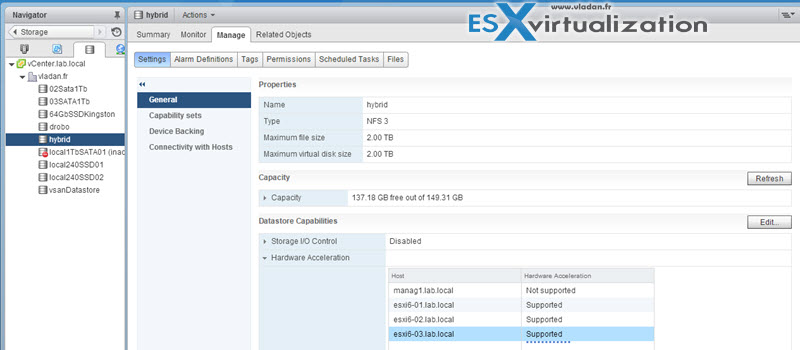
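If you need to check many devices, the text output of `esxcli storage core device vaai status get` can be parsed with a few lines of Python. This is a sketch that assumes the usual layout of a device ID line followed by indented `Key: value` lines; the sample text below is illustrative and the device ID is invented.

```python
from typing import Dict, List

# Illustrative sample only; real output comes from the esxcli command.
SAMPLE_OUTPUT = """\
naa.600508b1001c0000000000000000001
   VAAI Plugin Name:
   ATS Status: supported
   Clone Status: supported
   Zero Status: supported
   Delete Status: unsupported
"""


def parse_vaai_status(text: str) -> Dict[str, Dict[str, str]]:
    """Parse the per-device VAAI status listing into nested dictionaries."""
    devices: Dict[str, Dict[str, str]] = {}
    current = None
    for line in text.splitlines():
        if not line.strip():
            continue  # skip blank separator lines
        if not line[0].isspace():
            # Unindented line: start of a new device entry.
            current = line.strip()
            devices[current] = {}
        elif current is not None and ":" in line:
            key, _, value = line.strip().partition(":")
            devices[current][key.strip()] = value.strip()
    return devices


def unsupported_primitives(devices: Dict[str, Dict[str, str]]) -> Dict[str, List[str]]:
    """List, per device, the '... Status' fields that are not 'supported'."""
    return {
        dev: [k for k, v in fields.items() if k.endswith("Status") and v != "supported"]
        for dev, fields in devices.items()
    }
```

Feeding the captured command output into `parse_vaai_status` and then `unsupported_primitives` gives a quick list of devices that lack a given offload.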

Via vSphere web client you can also see if a datastore has hardware acceleration support…

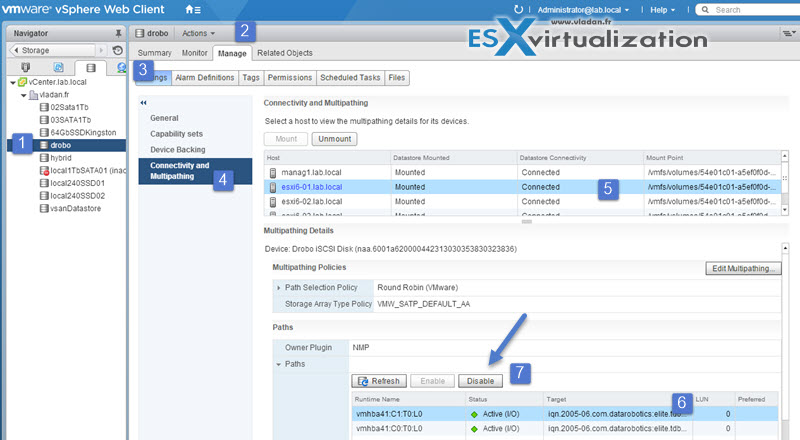

Disable a path to a VMFS Datastore

It’s possible to temporarily disable a storage path, for example for maintenance reasons. Check Storage Paths in the vSphere Storage Guide on p. 192.

You can disable the path through the Web Client from the datastore view, the storage device view, or the adapter view.
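A path can also be turned off and on again from the ESXi shell with `esxcli storage core path set`. The sketch below builds the command for either direction; the helper name is made up and the path name in the example is a placeholder.

```python
from typing import List


def path_state_command(path: str, enabled: bool) -> List[str]:
    """Build the esxcli command that enables or disables a storage path."""
    # esxcli accepts "active" to enable a path and "off" to disable it.
    state = "active" if enabled else "off"
    return ["esxcli", "storage", "core", "path", "set",
            "--state", state, "--path", path]


if __name__ == "__main__":
    # Placeholder runtime name; list real paths with `esxcli storage core path list`.
    print(" ".join(path_state_command("vmhba2:C0:T0:L1", enabled=False)))
```

Remember to set the path back to active once the maintenance is finished.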

Determine use case for multiple VMFS/NFS Datastores

Usually the choice to use multiple VMFS/NFS datastores is based on performance, capacity, and data protection.

Separate spindles – having different RAID groups helps provide better performance. Then you can have multiple VMs running I/O-intensive applications. If you choose a single big datastore instead, you might have performance issues.

Separate RAID groups – for certain applications, such as SQL Server, you may want one RAID configuration for the disks that the logs sit on and a different one for the disks that the actual databases sit on.

Redundancy – You might want to replicate VMs to another host/cluster. You may want the replicated VMs to be stored on different disks than the production VMs. If the production disk system fails, the secondary disk system will most likely still be running just fine.

Load balancing – you can balance performance/capacity across multiple datastores.

Tiered Storage – Arrays often come with Tier 1, Tier 2, and Tier 3 storage, so you can place your VMs according to the performance level they need.