How Isilon NFS Datastores Are Identified in VMware vSphere

As one of the more Isilon-centric vSpecialists at EMC, I see a lot of questions about leveraging Isilon NFS in vSphere environments. Most of them stem from confusion about how SmartConnect works and the load balancing/distribution it provides.

Not too long ago, a question arose around mounting NFS exports from an Isilon cluster, and the methods to go about doing that.

Duncan Epping published an article recently titled How does vSphere recognize an NFS datastore?

I am not going to rehash Duncan’s content, but suffice it to say that a combination of the target NAS (by IP, FQDN, or short name) and the complete NFS export path is used to create the UUID of an NFS datastore. As Duncan linked in his article, there is another good explanation by the NetApp folks here: NFS Datastore UUIDs: How They Work, and What Changed In vSphere 5
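To make the point concrete, here is a minimal sketch of why the same export mounted by FQDN and by IP ends up looking like two different datastores. The hash used below (SHA-1 via `hashlib`) and the function name `datastore_identity` are assumptions for illustration only; this is not the algorithm vSphere actually uses, just a stand-in that derives an identifier from the server string plus the export path.

```python
import hashlib


def datastore_identity(server, export_path):
    """Illustrative stand-in (NOT the real vSphere algorithm): derive an
    identifier from the exact server string and the full export path."""
    key = f"{server}:{export_path}".encode("utf-8")
    digest = hashlib.sha1(key).hexdigest()
    return f"{digest[:8]}-{digest[8:16]}"


by_fqdn = datastore_identity("mavericks.vlab.jasemccarty.com", "/ifs/nfs/nfsds1")
same_again = datastore_identity("mavericks.vlab.jasemccarty.com", "/ifs/nfs/nfsds1")
by_ip = datastore_identity("192.168.80.81", "/ifs/nfs/nfsds1")

print(by_fqdn == same_again)  # True: same server string and path
print(by_fqdn == by_ip)       # False: same export, different server string
```

The takeaway is only that the identifier follows the strings entered at mount time, not the storage behind them.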

Looking back at my Isilon One Datastore or Many post, there are a couple of ways to mount NFS-presented datastores from an Isilon cluster if vSphere 5 is used. Previous versions of vSphere are limited to a single datastore per IP address and path.

Using vSphere 5, one of the recommended methods is to use a SmartConnect Zone name in conjunction with a given NFS export path.

In my lab, I have 3 Isilon nodes running OneFS 7.0, along with 3 ESXi hosts running vSphere 5.1. The details of the configuration are:

- Isilon Cluster running OneFS 7.0.1.1

- SmartConnect Zone with the name of mavericks.vlab.jasemccarty.com

- SmartConnect Service IP of 192.168.80.80

- Pool0 with the range of 192.168.80.81-.83 & 1 external interface for each node

- SmartConnect Advanced Connection Policy is Connection Count

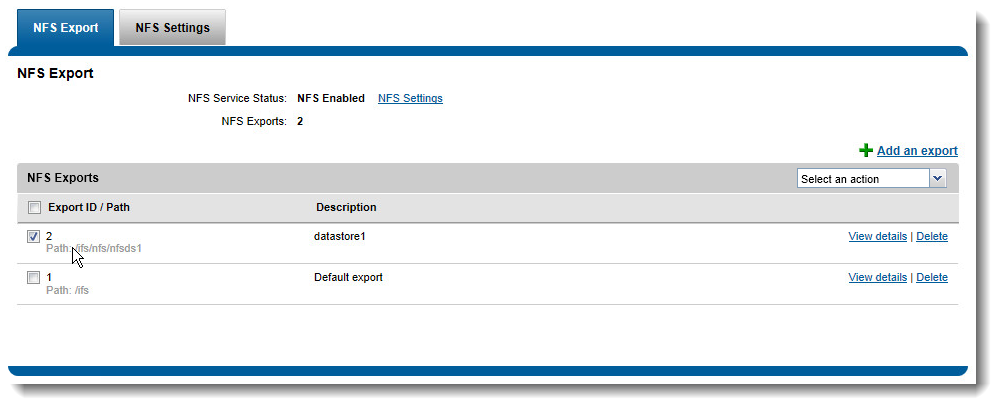

- NFS export with the following path: /ifs/nfs/nfsds1

- vCenter Server 5.1 on Windows 2008 R2 with Web Client

- 3 ESXi hosts running vSphere 5.1

A quick note about the mount point I am using… By default, an Isilon cluster provides the /ifs NFS export. I typically create folders underneath the /ifs path and export them individually. I’m not 100% certain of VMware’s support policy on mounting subdirectories from an export, but I’ve reached out to Cormac Hogan for some clarification. Update: After speaking with Cormac, VMware’s stance is that support is provided by the NAS vendor. Isilon supports mounting subdirectories under the /ifs mount point. Personally, I have typically created multiple mount points, giving me additional flexibility.

One of the biggest issues when mounting NFS datastores (as Duncan mentioned in his post) is ensuring that the server name (IP/FQDN) and the path (NFS export) are entered identically on all hosts. **Wake-up call for those using the old vSphere Client: it is easier from the Web Client.**
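As a rough illustration of that check, the sketch below compares the (server, path) pair recorded for each host and flags any host that differs from the majority. The host names `esxi1` to `esxi3` and the function `mismatched_hosts` are hypothetical, and the data is typed in by hand here rather than pulled from vCenter.

```python
from collections import Counter


def mismatched_hosts(mounts):
    """Return hosts whose (server, share) pair differs from the most common pair.

    The comparison is an exact string match, so an FQDN and an IP that point
    at the same cluster still count as different.
    """
    most_common_pair, _ = Counter(mounts.values()).most_common(1)[0]
    return sorted(host for host, pair in mounts.items() if pair != most_common_pair)


# Hypothetical inventory: the third host was mounted by IP instead of zone name.
mounts = {
    "esxi1": ("mavericks.vlab.jasemccarty.com", "/ifs/nfs/nfsds1"),
    "esxi2": ("mavericks.vlab.jasemccarty.com", "/ifs/nfs/nfsds1"),
    "esxi3": ("192.168.80.83", "/ifs/nfs/nfsds1"),
}

print(mismatched_hosts(mounts))  # ['esxi3']
```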

If I mount the SmartConnect Zone Name (mavericks.vlab.jasemccarty.com) and my NFS mount (/ifs/nfs/nfsds1) it would look something like this:

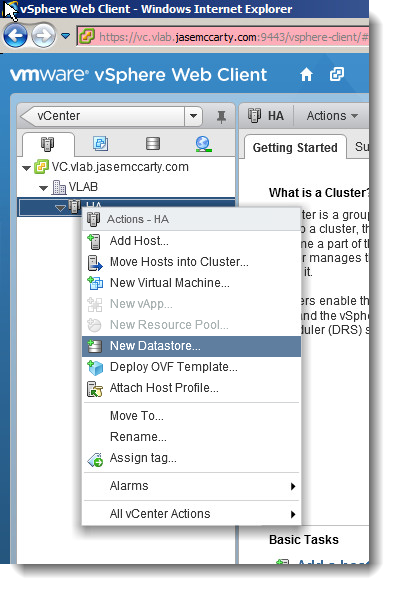

Begin by adding the datastore to the cluster (named HA here)

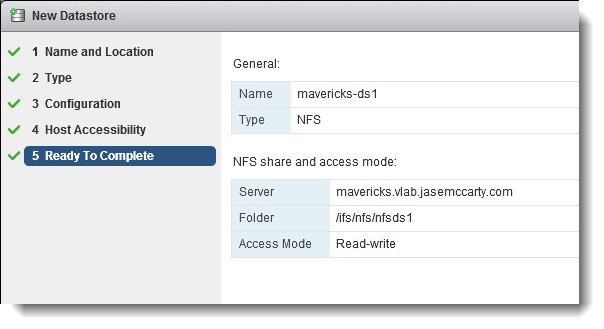

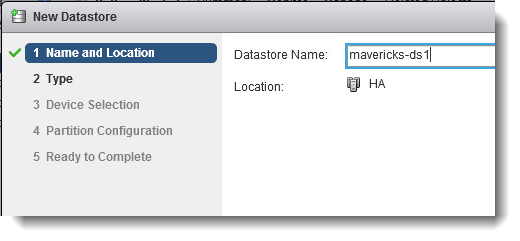

Give the datastore a name (mavericks-ds1 in this case).

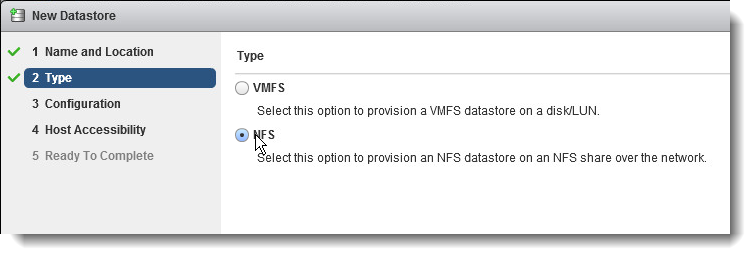

Select NFS as the type of datastore

Provide the SmartConnect Zone name as the Server, and the NFS Export as the Folder

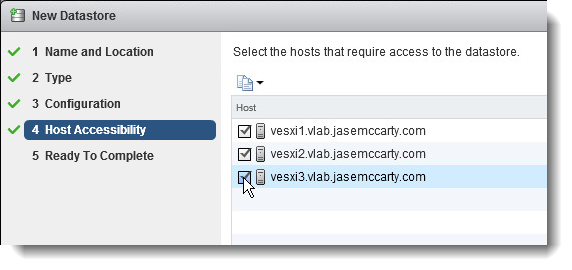

Select all hosts in the cluster to ensure they all have the datastore mounted

(this will ensure they see the same name)

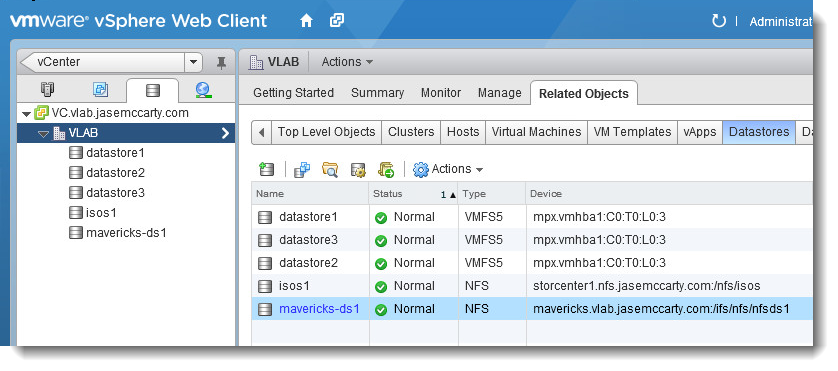

Now only a single NFS datastore (with the above path) is mounted

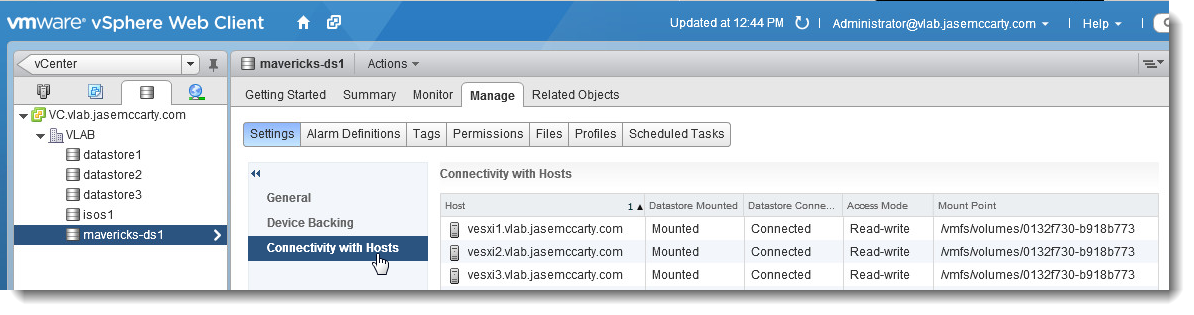

Looking further, each host sees an identical mount point.

This can also be confirmed from the ESXi console on each host.
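For reference, here is a small sketch that assembles the console commands involved, using the zone name, export path, and datastore name from this lab. `esxcli storage nfs add` and `esxcli storage nfs list` are standard ESXi 5.x commands; the Python helpers `nfs_add_command` and `nfs_list_command` are hypothetical names, and the script only prints the command lines rather than running anything on a host.

```python
import shlex

SERVER = "mavericks.vlab.jasemccarty.com"  # SmartConnect Zone name
SHARE = "/ifs/nfs/nfsds1"                  # NFS export path
VOLUME = "mavericks-ds1"                   # datastore name


def nfs_add_command(server, share, volume):
    """Build the argument list that mounts an NFS export as a datastore."""
    return ["esxcli", "storage", "nfs", "add",
            "--host", server, "--share", share, "--volume-name", volume]


def nfs_list_command():
    """Build the argument list that lists the NFS datastores on a host."""
    return ["esxcli", "storage", "nfs", "list"]


add_line = shlex.join(nfs_add_command(SERVER, SHARE, VOLUME))
list_line = shlex.join(nfs_list_command())
print(add_line)
print(list_line)
```

Running the same add command with the same strings on every host is what keeps the server name and path identical everywhere.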

This would lead one to believe that all hosts were talking to a single node. But are they?

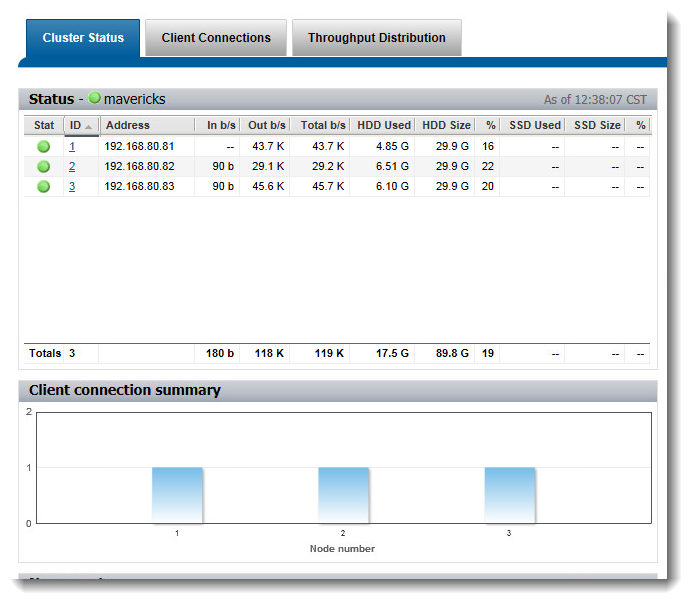

The OneFS Web Administration Interface shows that each of the 3 hosts has its own connection to a different one of the 3 nodes

Because each of these hosts sees the same mount point, SmartConnect brings value by providing a load balancing mechanism for NFS-based datastores. Even though each host resolves the SmartConnect Zone name to a different IP, they all see the mounted NFS export as a single entity.

Which Connection Policy is best? That really depends on the environment. Out of the box, Round Robin is the default method. The only intelligence there is basically “Which IP was handed out last? Ok, here is the next.” Again, depending on the environment, that may be sufficient.
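A toy model of Round Robin, assuming the Pool0 range from my lab: each request simply gets the next address in the pool, with no regard for what the nodes are doing. This is only a sketch of the idea, not how SmartConnect is implemented internally.

```python
from itertools import cycle

# Pool0 addresses from the lab configuration above.
POOL0 = ["192.168.80.81", "192.168.80.82", "192.168.80.83"]


def round_robin(pool):
    """Return an endless iterator that hands out pool addresses in order."""
    return cycle(pool)


answers = round_robin(POOL0)
handed_out = [next(answers) for _ in range(5)]
print(handed_out)
# ['192.168.80.81', '192.168.80.82', '192.168.80.83', '192.168.80.81', '192.168.80.82']
```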

I personally see much more efficiency using one of the other options, which include Connection Count (which I have demonstrated here), CPU Utilization (gives out IPs based on the CPU load per node in the cluster), or Network Throughput (based on how much traffic each node in the cluster is handling). Each connection policy could be relevant, depending on the workload.
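Connection Count can be sketched the same way: hand out the address of whichever node currently has the fewest connections. The host names and the function `pick_by_connection_count` are hypothetical, and the real policy works through SmartConnect's DNS answers rather than a dictionary, so treat this as a simplified model.

```python
# Pool0 addresses from the lab configuration above.
POOL0 = ["192.168.80.81", "192.168.80.82", "192.168.80.83"]


def pick_by_connection_count(connections):
    """Return the node IP with the fewest connections (ties go to the first listed)."""
    return min(connections, key=connections.get)


connections = {ip: 0 for ip in POOL0}
assignments = {}
for host in ["esxi1", "esxi2", "esxi3"]:  # hypothetical host names
    ip = pick_by_connection_count(connections)
    connections[ip] += 1  # the new mount now counts against that node
    assignments[host] = ip

print(assignments)
# {'esxi1': '192.168.80.81', 'esxi2': '192.168.80.82', 'esxi3': '192.168.80.83'}
```

With three hosts and three idle nodes, each host lands on a different node. Swapping the connection counter for a CPU or throughput figure would model the other two policies in the same way.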

Another thing to keep in mind: when using a tiered approach with SmartConnect Advanced, multiple zones can be created, each with its own Connection Policy.

Hopefully this demonstrates how multiple nodes, with different IP addresses, can be presented to vSphere 5 as a single datastore.