Controlling vMotion Bandwidth in Multi-NIC Mode with Traffic Shaping

Traffic Shaping is most likely one of the least used features I’ve seen on a virtual switch. It had little use in the traditional “porcupine host” design, where a large number of 1 GbE links were tethered to a rack mount server. Typically, each link carried discrete traffic and was paired with a buddy link for failover. However, the introduction of cheaper 10 GbE links, converged infrastructure, and multi-NIC vMotion has muddled things a bit. In these situations, it becomes vital to control vMotion bandwidth when multiple hosts are sending virtual machines to a single destination host.

Network I/O Control (NIOC) gets a lot of attention because it controls the ingress of traffic, which is a fancy way of saying traffic generated by the host. NIOC manages the host’s network traffic to ensure it does not exceed the defined network resource shares and limits, but it does not control traffic destined to the host, which is termed egress. This gives Traffic Shaping another shot at the spotlight, because it can artificially limit traffic in both ingress and egress use cases. On a standard vSwitch, it is defined at the switch or port group level and shapes ingress traffic only; on a VDS, it is defined on a port group and can shape both ingress and egress traffic.

Defining Ingress vs Egress

Finding the terms ingress and egress a bit hard to understand? Both terms are stated from the perspective of the virtual switch in relation to the host objects: vmkernel ports and virtual machines. Ingress is any traffic entering the switch from a host object. Egress is any traffic leaving the switch for a host object. I’ve also created a graphic below:

Note that the uplinks and the northbound network are not referenced. Traffic that enters the host from the physical network and is sent to a vmkernel port or virtual machine is egress traffic from a Traffic Shaping perspective.
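To make the direction rules concrete, here is a small Python sketch that applies the definitions above to a frame’s source and destination. The `Endpoint` enum and `shaping_directions` function are hypothetical names used only for illustration; they model the definitions in this post, not any vSphere API.

```python
from enum import Enum


class Endpoint(Enum):
    """Things a frame can come from or go to, relative to one virtual switch."""
    VM = "virtual machine"
    VMKERNEL = "vmkernel port"
    UPLINK = "physical uplink / northbound network"


# Only host objects count when naming a Traffic Shaping direction.
HOST_OBJECTS = {Endpoint.VM, Endpoint.VMKERNEL}


def shaping_directions(source: Endpoint, destination: Endpoint) -> list[str]:
    """Return the direction(s) a frame is seen in, from the switch's point of view."""
    directions = []
    if source in HOST_OBJECTS:
        # The frame enters the switch from a VM or vmkernel port.
        directions.append("ingress")
    if destination in HOST_OBJECTS:
        # The frame leaves the switch toward a VM or vmkernel port.
        directions.append("egress")
    # Uplink-to-uplink traffic never touches a host object, so it gets no direction.
    return directions
```

For example, `shaping_directions(Endpoint.UPLINK, Endpoint.VMKERNEL)` returns `["egress"]`, which is the incoming vMotion case on a destination host. Under these definitions, a VM-to-VM frame on the same switch is seen as ingress at the sending port and egress at the receiving port.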

Configuring Traffic Shaping for vMotion

First, let me go over the problem. With source-based ingress traffic shaping, or NIOC, you control how much traffic a single host can generate toward another host. Most of the time this is fine, because you typically have one host performing one or many vMotions to other hosts in the cluster. As long as only a single host is doing vMotions, you’ll never hit any snags, because NIOC or ingress traffic shaping prevents that host from saturating the uplinks.

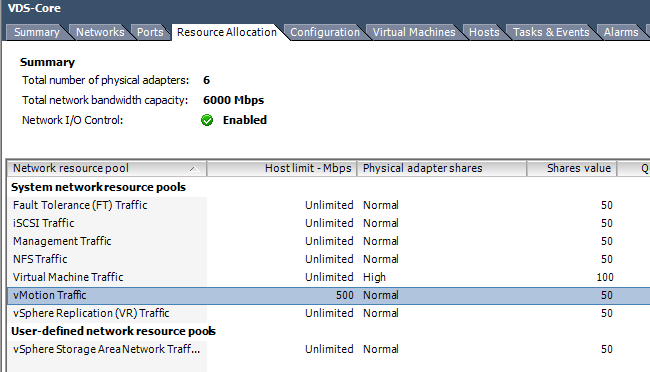

Here’s a sample configuration from the Wahl Network homelab. I’ve configured NIOC on my main VDS, named VDS-Core, to limit vMotion at 500 Mbps.

Want to know more about setting host limits using NIOC? Check out my “Testing vSphere NIOC Host Limits on Multi-NIC vMotion Traffic” post.

This is half of my uplink’s 1 GbE speed and is really there just for demonstration purposes – I’m not suggesting you set it so low in a real environment.

This will effectively limit my host to 500 Mbps, per uplink, when sending out vMotion traffic.

NIOC Isn’t Enough

The NIOC host limit gives a false sense of security. Multiple hosts can still vMotion workloads over to a single target host. Each source host is limited to 500 Mbps, but the destination host receives N x 500 Mbps of traffic, where N is the number of hosts sending vMotions. The line rate of the physical uplinks is the only ceiling, because traffic can’t go faster than the connection.
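The N x 500 Mbps arithmetic can be sketched as a tiny Python function. It assumes, for simplicity, that every sending host pushes its full NIOC-limited rate toward the same destination uplink; `destination_rx_per_uplink_mbps` is a hypothetical helper, not part of any VMware tooling.

```python
def destination_rx_per_uplink_mbps(senders: int, nioc_limit_mbps: float,
                                   line_rate_mbps: float) -> float:
    """Worst-case vMotion traffic arriving on one destination uplink.

    NIOC only caps what each source host generates, so arrivals from
    several senders add up (N x limit). Nothing caps the receiving side
    except the physical line rate.
    Assumption: every sender aims its full limited rate at the same uplink.
    """
    offered = senders * nioc_limit_mbps
    return min(offered, line_rate_mbps)


for n in (1, 2, 3):
    print(n, "sender(s):", destination_rx_per_uplink_mbps(n, 500, 1000), "Mbps")
```

With the homelab numbers (two senders, a 500 Mbps NIOC limit, 1 GbE uplinks), the function returns 1000: the destination uplink is saturated even though each sender stays within its own limit, which lines up with the result in Example 1.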

Example 1 – No Traffic Shaping, Multiple Host vMotion to Single Target Host

In this test, I’m sending very active VMs from ESX0 and ESX1 over to ESX2. Each host has a pair of 1 GbE uplinks and is configured with multi-NIC vMotion. NIOC is set to limit vMotion at 500 Mbps. Here’s an ESXTOP grab showing the traffic:

The vMotion traffic has far exceeded the 500 Mbps limit on ESX2 and is roughly saturating both 1 GbE uplinks.

Example 2 – Traffic Shaping Configured, Multiple Host vMotion to Single Target Host

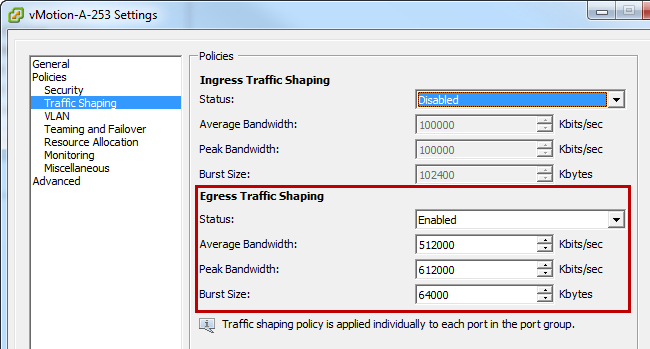

In this example, I’ve configured egress Traffic Shaping on both of my vMotion port groups.

- Average Bandwidth: 512,000 Kbits/sec – the average bandwidth that traffic shaping will shoot for. Any dips below this will accumulate as a credit to allow bursting.

- Peak Bandwidth: 612,000 Kbits/sec – this is the maximum throughput I want the port group to hit, which I’ve set to about 20% higher than my average of 500 Mbps. I like to leave a little flexibility. Optionally, you could set the peak value to the average value to avoid any bursting.

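- Burst Size: 64,000 Kbytes – this is an amount of data rather than a rate: the maximum credit the port can accumulate and then send above the average bandwidth, at up to the peak rate. It therefore determines how long I can burst beyond the average. If you set the peak value and average value to the same number, burst becomes irrelevant.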

- Luc Dekens (@LucD22) has a great graphic of this on his website!
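A quick way to sanity-check these settings is to convert the units and work out how long a full burst lasts. This Python sketch assumes 1 Mbit = 1,024 Kbit (the convention implied by 512,000 Kbits/sec being 500 Mbps) and 8 Kbit per Kbyte. It also uses a simplified credit model in which the port spends burst credit at the rate of peak minus average while transmitting at peak. The function names are hypothetical.

```python
KBITS_PER_MBIT = 1024  # assumption: matches 512,000 Kbit/s = 500 Mbps in the post
KBITS_PER_KBYTE = 8


def kbits_to_mbps(kbits_per_sec: float) -> float:
    """Convert a Kbit/s setting to Mbps."""
    return kbits_per_sec / KBITS_PER_MBIT


def max_burst_seconds(average_kbps: float, peak_kbps: float,
                      burst_kbytes: float) -> float:
    """How long a port can transmit at peak once its burst credit is full.

    Simplified model: while sending at peak, the port consumes credit at
    (peak - average) Kbit/s, so a full credit lasts burst / (peak - average).
    """
    if peak_kbps <= average_kbps:
        # Peak equal to average means no bursting, so burst size is irrelevant.
        return 0.0
    return burst_kbytes * KBITS_PER_KBYTE / (peak_kbps - average_kbps)


average, peak, burst = 512_000, 612_000, 64_000  # values from the list above
print(f"average ~ {kbits_to_mbps(average):.0f} Mbps")
print(f"peak    ~ {kbits_to_mbps(peak):.0f} Mbps ({peak / average - 1:.1%} above average)")
print(f"burst at peak lasts ~ {max_burst_seconds(average, peak, burst):.2f} s")
```

With these values, the peak works out to roughly 598 Mbps (about 19.5% over the average), and a full 64,000 Kbyte burst credit sustains the peak for about 5.1 seconds before the port falls back to 500 Mbps.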

Just like with the previous test, I’m sending very active VMs from ESX0 and ESX1 over to ESX2. Each host has a pair of 1 GbE uplinks and is configured with multi-NIC vMotion. NIOC is set to limit vMotion at 500 Mbps and egress Traffic Shaping is configured as shown above. Here’s an ESXTOP grab showing the traffic:

Much better! I’m receiving right around 500 Mbps of traffic.
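Egress shaping on the destination caps what is delivered to the port group no matter how many hosts are sending, which is why the number of senders stops mattering. The Python sketch below simulates a simplified token-bucket shaper with the average/peak/burst settings above. It is an assumption-laden model that ignores dropped frames and how the senders react to them, and `shaped_throughput_mbps` is a hypothetical helper, not how ESXi implements shaping internally.

```python
def shaped_throughput_mbps(offered_mbps: float, average_mbps: float,
                           peak_mbps: float, burst_mbit: float,
                           duration_s: float = 60.0,
                           dt: float = 0.01) -> tuple[float, float]:
    """Simulate a simplified average/peak/burst shaper on one port group.

    Returns (mean_rate_mbps, max_rate_mbps) over the run. Credit accrues
    whenever the port sends below average, is capped at the burst size,
    and is spent whenever the port sends above average (never above peak).
    """
    credit = burst_mbit  # assume the port was idle beforehand, so credit is full
    sent = 0.0
    max_rate = 0.0
    for _ in range(int(round(duration_s / dt))):
        # Fastest allowed rate this step: limited by demand, peak, and credit left.
        rate = min(offered_mbps, peak_mbps, average_mbps + credit / dt)
        # Sending below average refills credit; sending above it drains credit.
        credit = min(burst_mbit, max(0.0, credit + (average_mbps - rate) * dt))
        sent += rate * dt
        max_rate = max(max_rate, rate)
    return sent / duration_s, max_rate


# Two senders at 500 Mbps each offer 1,000 Mbps to the shaped port group.
mean, top = shaped_throughput_mbps(1000, 500, 612_000 / 1024, 64_000 * 8 / 1024)
print(f"mean {mean:.1f} Mbps, max {top:.1f} Mbps")
```

In this model the port runs at about 598 Mbps during the initial burst, and the 60-second mean comes out around 508 Mbps, drifting toward the 500 Mbps average over longer runs even though twice that much traffic is offered.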

Thoughts

Traffic Shaping is a relatively easy and proven method of making sure that vMotion traffic does not saturate the destination host’s uplinks. I find this more relevant now that multi-NIC vMotion is becoming popular, because multiple uplinks can now be bombarded with vMotions at the same time.